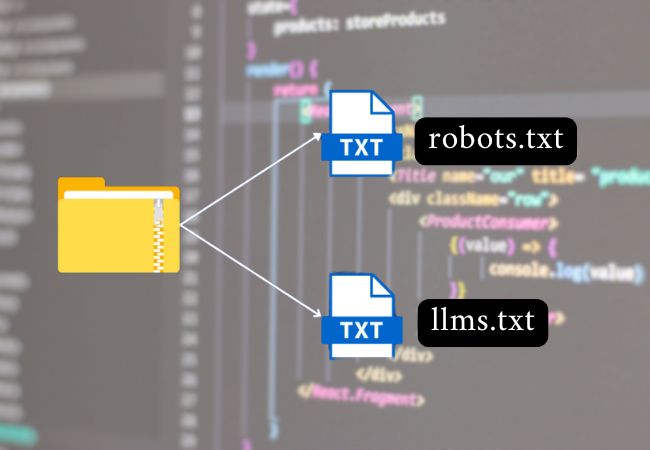

An llms.txt file is similar to a robots.txt file, which guides how search engines crawl and index a site, but llms.txt is aimed at AI bots instead. It is a plain-text file, written in Markdown, that helps AI models, or LLMs (Large Language Models) such as ChatGPT and Google Gemini, interact with a website. It is used to manage how AI tools access a website's content.

Why is llms.txt important?

1. Limited by context window:

Passing an entire website to an LLM as context at once does not work because of the model's limited context window.

2. HTML Complexity:

It is hard to extract meaningful text from ad-filled and JavaScript-intensive websites.

3. Data Formatting:

Crawlers often misread a website's structure, so the data they extract ends up incomplete or irrelevant.

What is the advantage of llms.txt?

1. Gives one source of truth:

A well-organized Markdown file at /llms.txt provides clear background information and direct links to LLM-optimized pages.

2. Standardizes AI Access to Websites:

LLMs can use machine-readable Markdown (.md) copies of web pages instead of scraping dynamic HTML.

3. Simplifies AI Indexing:

Sites can explicitly define which content matters most (e.g., API documentation, knowledge bases) instead of leaving AI models to infer it.
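Point 2 mentions Markdown (.md) copies of web pages but does not say where those copies live. One convention proposed alongside llms.txt is to serve the copy at the page's own URL with ".md" appended, or "index.html.md" for URLs ending in a slash; treat that convention as an assumption for any particular site. The hypothetical helper markdown_copy_url below is a minimal sketch of that URL mapping:

```python
from urllib.parse import urlsplit, urlunsplit


def markdown_copy_url(page_url):
    """Map a page URL to where its Markdown copy would live under the assumed convention:
    append ".md" to the path, or "index.html.md" when the path ends in "/"."""
    parts = urlsplit(page_url)
    path = parts.path or "/"  # a bare domain is treated as the root path "/"
    if path.endswith("/"):
        path += "index.html.md"
    else:
        path += ".md"
    # Query strings and fragments are dropped; the copy is keyed on the path alone.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


print(markdown_copy_url("https://yournewsite.com/about"))  # https://yournewsite.com/about.md
print(markdown_copy_url("https://yournewsite.com/docs/"))  # https://yournewsite.com/docs/index.html.md
```

An LLM tool following this convention would try the .md URL first and fall back to the HTML page if the copy does not exist.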

| Feature | llms.txt | robots.txt |
|---|---|---|
| Purpose | Controls access for LLMs (Large Language Models) such as ChatGPT, Gemini, etc. | Controls access for search engine crawlers such as Googlebot, Bingbot, etc. |
| Targeted Agents | AI models (User-agent: GPTBot, Gemini, etc.) | Search engines (User-agent: Googlebot, Bingbot) |
| File Location | Root directory (/llms.txt) | Root directory (/robots.txt) |
| Goal | Presents a site's key knowledge in a form optimized for AI | Makes pages easy to discover through a crawlable structure, as part of search engine optimization |
| Primary Use Case | Manages AI model interaction with web content | Manages search engine crawling and indexing |
| Privacy Focus | Helps prevent AI from accessing sensitive or proprietary content | Blocks search engine crawlers from restricted pages |
| Impact on SEO | No direct impact on SEO or rankings | Directly impacts SEO by blocking or allowing pages |
| Adoption | Newly proposed; adoption is still emerging | Widely adopted standard for search engines |
| Example User-Agent | User-agent: GPTBot | User-agent: Googlebot |
| Contact Info Option | Includes contact info (Contact: field) for AI-related queries | No dedicated contact field |
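Both files sit in the site's root directory, so their location can be derived from any page URL on the same site. A minimal sketch, with root_file_url as a hypothetical helper name:

```python
from urllib.parse import urlsplit, urlunsplit


def root_file_url(page_url, filename):
    """Return the URL of a root-level file (such as llms.txt or robots.txt) for page_url's site."""
    parts = urlsplit(page_url)
    # Keep scheme and host; replace path, query, and fragment with "/<filename>".
    return urlunsplit((parts.scheme, parts.netloc, "/" + filename, "", ""))


page = "https://yournewsite.com/blog/post?id=7#comments"
print(root_file_url(page, "llms.txt"))    # https://yournewsite.com/llms.txt
print(root_file_url(page, "robots.txt"))  # https://yournewsite.com/robots.txt
```

Because the host is kept as-is, a subdomain such as docs.yournewsite.com resolves to its own root file rather than the main site's.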

Step-by-Step Guide to Create an llms.txt File for “Your New Site”

1. Start with an H1 Heading:

Use the # symbol to create the first line as an H1 heading.

Write the name of your website.

Example:

```markdown
# Your New Site
```

2. Add a Brief Description:

On the next line, add an information block in quote format using the > symbol.

Briefly describe your website in 1-3 sentences.

Example:

```markdown
> Welcome to Your New Site! Discover valuable content and insights.
```

3. Create Logical Blocks:

Use H2 headings (##) to create separate sections for different content categories.

Name each block clearly, such as:

- Main Documents

- Products

- Resources

Example:

```markdown
## Main Documents
```

4. Add Links and Descriptions:

For each block, include relevant links with brief descriptions.

Use the format:

```markdown
- [Document Name](URL): Short description.
```

Example:

```markdown
- [About Us](https://yournewsite.com/about): Learn about our mission and values.
- [Services](https://yournewsite.com/services): Explore our service offerings.
```

5. Add Additional Sections (Optional):

Include extra sections such as:

- Examples

- Privacy Policy

This provides more information for LLMs.
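The five steps above can also be scripted. As a minimal sketch, the hypothetical function build_llms_txt below assembles the H1 title, the > summary, and one ## block of link entries per section; the section contents are sample data taken from this guide.

```python
def build_llms_txt(title, summary, sections):
    """Assemble llms.txt text: an H1 title, a '>' summary, then one '##' block per section.

    sections maps each section heading to a list of (name, url, description) tuples.
    """
    lines = [f"# {title}", "", f"> {summary}"]
    for heading, links in sections.items():  # dicts keep insertion order (Python 3.7+)
        lines += ["", f"## {heading}", ""]
        for name, url, description in links:
            lines.append(f"- [{name}]({url}): {description}")
    return "\n".join(lines) + "\n"


sections = {
    "Main Documents": [
        ("About Us", "https://yournewsite.com/about", "Learn about our mission and values."),
        ("Services", "https://yournewsite.com/services", "Explore our service offerings."),
    ],
    "Resources": [
        ("FAQ", "https://yournewsite.com/faq", "Find answers to common questions."),
    ],
}

llms_txt = build_llms_txt(
    "Your New Site",
    "Welcome to Your New Site! Discover valuable content and insights.",
    sections,
)
print(llms_txt)
```

To publish the result, save it as llms.txt in your site's root directory, for example with pathlib.Path("llms.txt").write_text(llms_txt, encoding="utf-8").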

Final Example:

```markdown
# Your New Site

> Welcome to Your New Site! Discover valuable content and insights.

## Main Documents

- [About Us](https://yournewsite.com/about): Learn about our mission and values.
- [Services](https://yournewsite.com/services): Explore our service offerings.

## Products

- [Product Catalog](https://yournewsite.com/products): Browse our product range.
- [New Arrivals](https://yournewsite.com/new): Discover our latest offerings.

## Resources

- [Blog](https://yournewsite.com/blog): Read expert articles and tips.
- [FAQ](https://yournewsite.com/faq): Find answers to common questions.
```

By following this structure, you’ll create a well-organized llms.txt file for Your New Site, making it easier for LLMs to navigate and understand your content.
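Before publishing, it can help to check the file against the structure described in this guide. The hypothetical check_llms_txt function below is a sketch, not an official validator: it flags a missing H1 first line, a missing > summary, missing ## sections, and link entries that do not match the "- [Name](URL): description" pattern, including a space between ] and ( or an en dash (–) used as the bullet.

```python
import re

# A link entry: "- [Name](URL)" optionally followed by ": description".
LINK_ENTRY = re.compile(r"^[-*]\s+\[(?P<name>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<description>.*))?$")

# Characters that start a bullet-like line; the en dash (U+2013) is not a valid
# Markdown list marker, so lines using it are reported as malformed.
BULLET_STARTS = ("-", "*", "\u2013")


def check_llms_txt(text):
    """Return a list of structural problems in llms.txt text (an empty list means none found)."""
    problems = []
    lines = [line.strip() for line in text.splitlines() if line.strip()]

    if not lines or not lines[0].startswith("# "):
        problems.append("first line should be an H1 title, e.g. '# Your New Site'")
    if not any(line.startswith(">") for line in lines):
        problems.append("no '>' summary line found")

    section = None  # heading of the '##' block currently being read
    for line in lines:
        if line.startswith("## "):
            section = line[3:]
        elif line.startswith(BULLET_STARTS):
            if section is None:
                problems.append(f"link outside any '##' section: {line}")
            elif LINK_ENTRY.match(line) is None:
                problems.append(f"malformed link entry: {line}")

    if section is None:
        problems.append("no '##' sections found")
    return problems


sample = """# Your New Site

> Welcome to Your New Site! Discover valuable content and insights.

## Main Documents

- [About Us](https://yournewsite.com/about): Learn about our mission and values.
- [Services] (https://yournewsite.com/services): Explore our service offerings.
"""

for problem in check_llms_txt(sample):
    print(problem)
# malformed link entry: - [Services] (https://yournewsite.com/services): Explore our service offerings.
```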


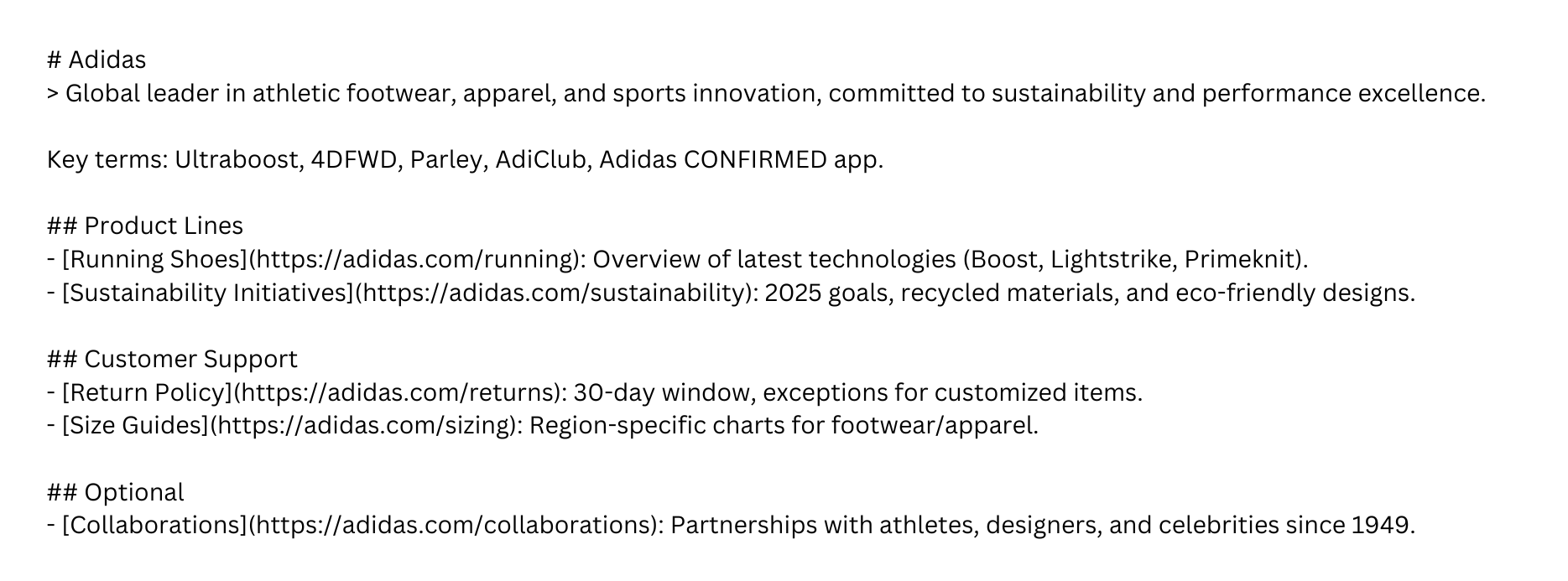

An example of llms.txt for Adidas:

Above would direct LLMs to technical product details, policies and optional historical content.

How to Access llms.txt via API

1. Use Your API Tool:

- Open an API testing tool like Postman or Insomnia.

2. Enter the Website URL:

- Type the URL in the following format and send a GET request to fetch the file:

https://yournewsite.com/llms.txt

3. API Request Examples:

- Using Python:

```python
import requests

url = "https://yournewsite.com/llms.txt"

# Send a GET request for the file; the timeout stops the call from hanging indefinitely
response = requests.get(url, timeout=10)

if response.status_code == 200:
    print("llms.txt content:\n")
    print(response.text)
else:
    print(f"Failed to fetch llms.txt. Status code: {response.status_code}")
```

- Using JavaScript (Node.js):

```javascript
// Requires the axios package (npm install axios)
const axios = require('axios');

const url = 'https://yournewsite.com/llms.txt';

axios.get(url)
  .then(response => {
    console.log('llms.txt content:');
    console.log(response.data);
  })
  .catch(error => {
    console.error('Error fetching llms.txt:', error.message);
  });
```

That's it! This lets you fetch and review a site's llms.txt file programmatically.


Impact of llms.txt on SEO

1. Content Monetization Potential:

With llms.txt, website owners may be able to turn their content into a monetizable asset. Blogs, videos, and articles that were previously free for AI models to crawl could be protected or selectively shared. By blocking unauthorized AI use, businesses could charge AI companies for access, creating a new revenue stream. This adds value to original content and makes it a potential source of profit.

2. Selective AI Sharing for Authority:

llms.txt enables platform-specific content permissions, allowing businesses to share their content with trusted platforms (e.g., Google) while blocking it from less reputable or lower-quality AI models. This selective sharing helps protect content integrity and ensures only authorized platforms benefit from the data, potentially boosting rankings on preferred search engines.

3. Enhanced Content Privacy and Protection:

SEO has traditionally focused on visibility and discoverability, but with llms.txt, businesses can also prioritize content privacy. The file signals that unauthorized AI models should not train on proprietary content, helping to safeguard intellectual property; like robots.txt, though, it only works if AI crawlers choose to honor it. By limiting AI access, businesses can retain the competitive advantage of their unique content.

4. Optimizing for AI Search:

Just as websites optimize for search engines, llms.txt introduces the need to optimize for AI models. Businesses can strategically define permissions, allowing certain AI systems to access their content for improved visibility while restricting others. This could affect how content appears in AI-generated search summaries, making AI SEO strategies essential.

5. Improved Control Over Content Usage:

The llms.txt file gives businesses greater control over how their content is used and displayed in AI-generated results. This helps ensure that only authorized AI platforms showcase their content, reducing the risk of misinformation or unauthorized content replication.

llms.txt adds a new dimension to SEO by balancing visibility with control, creating monetization opportunities, and optimizing content for AI-specific indexing.
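The selective-sharing idea in point 2 needs some way to express per-agent rules, and the llms.txt format described in the guide above has no syntax for that. Per-agent allow/block rules are conventionally written in robots.txt, so this minimal sketch expresses them in robots.txt form and evaluates them with Python's standard-library urllib.robotparser module. The rules and the is_allowed helper are hypothetical, and whether a given AI platform honors such rules is up to that platform.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block OpenAI's GPTBot everywhere, allow Googlebot and all other agents.
RULES = """\
User-agent: GPTBot
Disallow: /

User-agent: Googlebot
Allow: /

User-agent: *
Allow: /
"""


def is_allowed(rules_text, user_agent, url):
    """Return True if rules_text permits user_agent to fetch url (robots.txt semantics)."""
    parser = RobotFileParser()
    parser.parse(rules_text.splitlines())  # parse from a string instead of fetching over HTTP
    return parser.can_fetch(user_agent, url)


for agent in ("GPTBot", "Googlebot", "SomeOtherBot"):
    print(agent, is_allowed(RULES, agent, "https://yournewsite.com/blog"))
# GPTBot False
# Googlebot True
# SomeOtherBot True
```

Agents without their own group fall back to the "User-agent: *" rules, which is why SomeOtherBot is allowed here.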


What’s next?

With the development of AI tools, integrating llms.txt into your website strategy is becoming increasingly important. It lets website owners manage AI access so that only appropriate content is available to machine learning models. By offering a clear, structured guide for LLMs, llms.txt improves data accuracy, maintains privacy, and makes content more relevant when AI retrieves information.

Going forward, as more web interactions are driven by artificial intelligence, companies that adopt llms.txt early may gain a competitive advantage through greater discoverability, credibility, and accessibility of their content on AI platforms.